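Load Balancing with LVS

This article covers the basic concepts of LVS and the principles behind it.

1. Introduction to LVS

LVS stands for Linux Virtual Server, an open-source project started by Dr. Wensong Zhang in 1998. As the Internet grew rapidly, servers hosting multimedia and other network services had to handle a fast-rising number of requests and therefore a large number of concurrent connections, so on heavily loaded servers CPU and I/O capacity quickly become the bottleneck. Because the performance of a single server is always limited, simply upgrading the hardware does not really solve the problem; serving a large number of concurrent requests requires multiple servers combined with load balancing. Linux Virtual Server (LVS) uses load balancing to combine multiple servers into a single virtual server, offering a low-cost solution whose capacity is easy to scale as demand grows. LVS is included in Linux kernels from 2.6 onward. LVS can carry very heavy load and leaves a lot of room for tuning. DPVS, an LVS variant developed by iQIYI and widely deployed in iQIYI's IDCs, is reported to deliver several times the performance of LVS. Other load balancers include nginx, HAProxy, F5 and NetScaler.

A basic goal of the LVS project is: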

Build a high-performance and highly available server for Linux using clustering technology, which provides good scalability, reliability and serviceability.

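1.1 Basic terminology

The following terms come up repeatedly in the rest of this article. They are summarized here for easy reference:

- IPVS, ipvs, ip_vs: the code that patches the Linux kernel on the director

- LVS, linux virtual server: the director together with the realservers. This set of hosts as a whole is called the virtual server and serves external clients transparently

- director: the node that runs the ipvs code. Clients connect to the director, which forwards the packets it receives to the realservers. The director is really just an IP router with a special set of rules.

- realservers: the hosts that provide the service and actually handle client requests

- client: the host or application that connects to the VIP on the director

- forwarding method: LVS-NAT, LVS-DR or LVS-Tun. The director's forwarding rules differ somewhat from those of an ordinary IP router; the forwarding method determines how the director forwards packets from the client to the realservers.

- scheduling: the algorithm the director uses to choose a realserver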

1.2 virtual services, scheduling groups

The following is the ipvsadm output of an LVS that provides telnet and squid services:

# ipvsadm

IP Virtual Server version 0.9.4 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP lvs.mack.net:squid rr

-> rs1.mack.net:squid Route 1 0 0

-> rs2.mack.net:squid Route 1 0 0

-> rs3.mack.net:squid Route 1 0 0

TCP lvs.mack.net:telnet rr

-> rs1.mack.net:telnet Route 1 0 0

-> rs2.mack.net:telnet Route 1 0 0

This LVS provides two virtual services, telnet and squid, which correspond to two virtual servers: one serves telnet (with 2 realservers) and the other serves squid (with 3 realservers). From the client's point of view there are simply 2 services on 2 servers.

Requests sent to each virtual server are forwarded to the realservers in its scheduling group according to the configured policy (here rr, i.e. round robin). The scheduling group for telnet is rs1 and rs2; the scheduling group for squid is rs1, rs2 and rs3. The telnet and squid virtual servers are scheduled independently of each other.
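To make the idea of independent scheduling groups concrete, here is a minimal sketch that simulates round-robin selection in plain shell. It is only an illustration: `rr_pick` is a hypothetical helper written for this article, and the real rr scheduler lives in the kernel and also takes weights into account.

```shell
#!/bin/sh
# Illustrative only: simulate round-robin (rr) selection over a scheduling group.
# rr_pick is a hypothetical helper, not part of ipvsadm or the kernel.

# rr_pick N SERVER... prints the server chosen for the N-th connection (N starts at 0).
rr_pick() {
    n=$1
    shift
    # $# is now the number of realservers in the group;
    # drop (n mod group size) servers so the chosen one becomes $1
    shift $(( n % $# ))
    printf '%s\n' "$1"
}

# Each virtual service walks through its own group, independently of the other.
for n in 0 1 2 3; do
    echo "squid  conn $n -> $(rr_pick "$n" rs1 rs2 rs3)"
    echo "telnet conn $n -> $(rr_pick "$n" rs1 rs2)"
done
```

With three squid realservers the fourth connection wraps around to rs1, while the fourth telnet connection lands on rs2, because each group keeps its own position.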

A configuration like this can also be extended to use firewall marks (fwmark).

1.3 Naming of IPs/networks in LVS

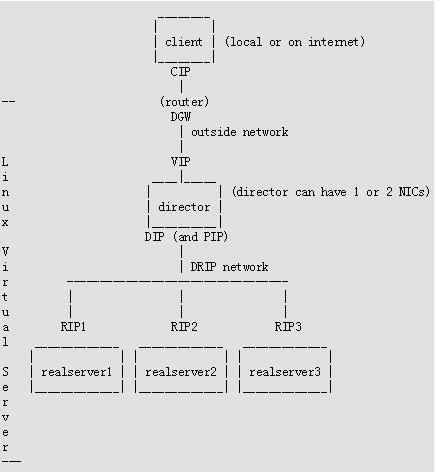

The router in the figure above is a conventional router. We do not treat it as part of the LVS, because normally we have no control over it. If you are a paying customer, however, your ISP may be willing to configure it to your requirements. If you do have access to the router, you can use it to solve the ARP problem and to install filter rules.

The IPs in the figure are named as follows:

client IP = CIP

virtual IP = VIP - the IP on the director that the client connects to

director IP = DIP - the IP on the director in the DIP/RIP (DRIP) network (this is the realserver gateway for LVS-NAT)

realserver IP = RIP (and RIP1, RIP2...) - the IP on the realserver

director GW = DGW - the director's gw (only needed for LVS-NAT) (this can be the realserver gateway for LVS-DR and LVS-Tun)

Here the VIP and the DIP are both configured as secondary IPs (that is, the NIC also has a separate primary IP), so that when the director fails, the VIP and DIP can be moved to a replica director. It is worth configuring the VIP/DIP as secondary IPs even when first setting up a single director, since that makes it easier to add a replica director for failover later.

For an LVS with two directors, the IPs in the DRIP network are named as follows:

primary director IP = PIP (the director which will be the master on bootup)

secondary director IP = SIP (the director which will be the backup on bootup)

At boot, the DIP and the PIP are bound to the same NIC; when the primary director fails over, the DIP moves to the NIC that carries the SIP.

LVS also uses the following terms for networks:

- DRIP network: the network containing the DIP and RIPs. (OK you come up with a better name.)

- network facing the internet or the outside network: the network on the director which receives packets from the outside world. This shouldn't be called the VIP network, as the VIP is also in the DRIP network (but not replying to arp calls) on the realservers in LVS-DR and LVS-Tun.

The following is the relevant configuration from one of our real environments:

# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet 10.17.240.180/32 brd 10.17.240.180 scope global lo:0

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP qlen 1000

link/ether 38:90:a5:bd:e1:8a brd ff:ff:ff:ff:ff:ff

inet 10.17.240.166/24 brd 10.17.240.255 scope global eth0

valid_lft forever preferred_lft forever

inet 10.17.240.180/32 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::3a90:a5ff:febd:e18a/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP qlen 1000

link/ether 38:90:a5:bd:e1:8b brd ff:ff:ff:ff:ff:ff

inet 10.17.241.166/24 brd 10.17.241.255 scope global eth1

valid_lft forever preferred_lft forever

inet6 fe80::3a90:a5ff:febd:e18b/64 scope link

valid_lft forever preferred_lft forever

4: eno1: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq state DOWN qlen 1000

link/ether 70:70:8b:5b:70:02 brd ff:ff:ff:ff:ff:ff

5: eno2: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq state DOWN qlen 1000

link/ether 70:70:8b:5b:70:03 brd ff:ff:ff:ff:ff:ff

The VIP is 10.17.240.180, bound to eth0. The DIP is 10.17.240.166, on the same NIC as the VIP. The secondary director is a separate host, 10.17.240.169; when the primary director fails, keepalived performs the failover and moves the VIP 10.17.240.180 to 10.17.240.169.

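2. LVS basics

An LVS is a server cluster that is transparent to external clients and appears to them as a single virtual server. The LVS director is a router that operates at layer 4 and lets you configure its forwarding rules. This is different from proxy servers such as nginx: connections neither originate nor terminate on the director, and it does not send ACKs itself; it acts purely as a router.

When a new connection request arrives for a service provided by the LVS, the director chooses a suitable realserver to handle it. From then on, all packets from that client on this connection pass through the director to the chosen realserver, and the association between the client and that realserver is kept for the lifetime of the TCP connection (or UDP exchange). For the next TCP connection, the director chooses a realserver again. So when a web browser connects to a web service provided by an LVS, each connection may be served by a different backend realserver.

LVS is managed from user space with ipvsadm and the schedulers, which are used to add and remove realservers (or services) and to handle failover. Note: LVS does not detect failures by itself. Failures have to be detected by some other means, after which ipvsadm is used to update the LVS state.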
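Since failure detection happens outside LVS, a monitor has to translate each check result into an ipvsadm call. The sketch below shows one way to do that as a dry run that only prints the command. `weight_cmd`, its argument order and the example addresses are assumptions made for illustration; the ipvsadm flags themselves (`-e`, `-t`, `-r`, `-g`, `-w`) are the ones listed in section 3.2.

```shell
#!/bin/sh
# Sketch: turn a health-check result into the ipvsadm command that updates LVS.
# weight_cmd and its argument order are hypothetical; only the ipvsadm flags are real.

# weight_cmd VIP:PORT RIP:PORT STATE
#   STATE "up"   -> weight 1 (the realserver receives new connections)
#   STATE "down" -> weight 0 (no new connections are scheduled to it)
weight_cmd() {
    case $3 in
        up)   w=1 ;;
        down) w=0 ;;
        *)    echo "unknown state: $3" >&2; return 1 ;;
    esac
    # -e edits an existing realserver entry; -g assumes LVS-DR forwarding
    echo "ipvsadm -e -t $1 -r $2 -g -w $w"
}

# Dry run: print what a monitor would execute after a failed check.
weight_cmd 192.168.79.180:80 192.168.79.129:80 down
```

Printing the command instead of executing it keeps the sketch safe to run anywhere; a real monitor (keepalived, for example) would perform the check and apply the change itself.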

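1) What is the VIP?

The IP that the director presents to clients is called the VIP (Virtual IP). When a client connects to the VIP, the director forwards the client's packets to one particular realserver.

Note: with firewall marks (fwmark), the VIP may be a group of IPs, but conceptually it behaves the same as a single IP.

A director can have multiple VIPs, and each VIP can be associated with one or more services. For example, you can use one VIP to load-balance HTTP/HTTPS and another VIP to provide FTP.

2) LVS director is an L4 switch

In networking terms, the director is a layer-4 router. It makes its routing decisions at the IP layer; all it sees is the stream of packets between the client and the realservers, and it does not inspect the content of the data.

Note: every LVS service has a port, but the director does not listen on these ports.

3) LVS packet forwarding

The director forwards packets in one of 3 ways:

- LVS-NAT: based on network address translation (NAT)

- LVS-DR: DR stands for direct routing; the director rewrites the MAC address of the packet and forwards it to the realserver

- LVS-Tun: Tun stands for tunnelling; the packet is IPIP-encapsulated and then forwarded to the realserver

With LVS-DR and LVS-Tun, the interface configuration (ifconfig) and routing tables on the realservers may need some changes. With LVS-NAT, the realserver only needs a working TCP/IP stack.

So far LVS works with any kind of backend service, except that LVS-DR and LVS-Tun cannot handle connections initiated by the realservers.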

3. LVS环境搭建

在具体讲解如何搭建LVS环境之前,我们先简单的介绍一些知识要点及注意事项:

3.1 预备知识

1) 典型网络架构

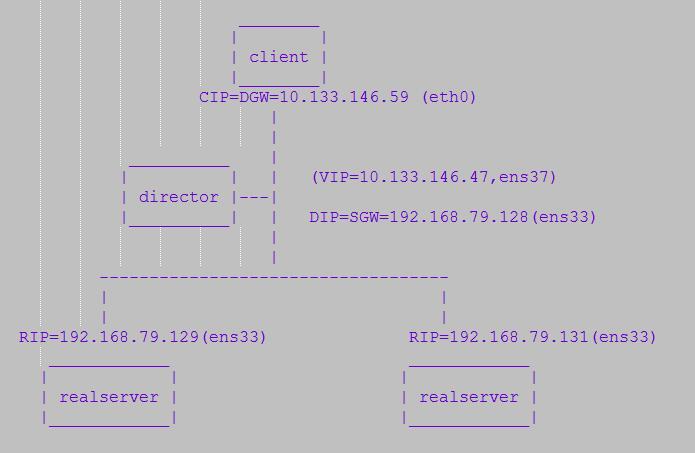

下图是一个典型的LVS-NAT搭建架构:

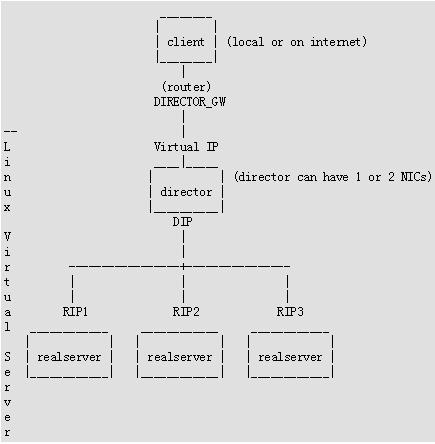

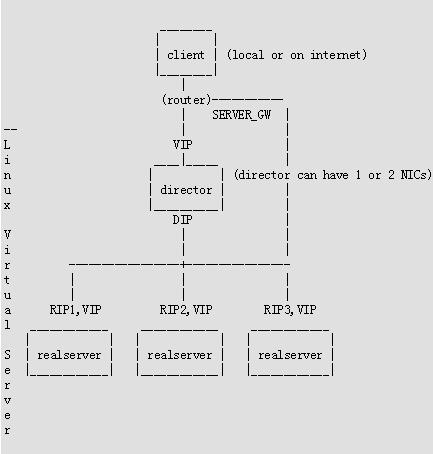

下图是一个典型的LVS-DR及LVS-Tun搭建架构:

对于director的failover处理,VIP与DIP需要被转移到一个备用的director上。这一般会使用keepalived等高可用性方案来进行

处理,这里不做介绍。

对于client、director、realserver的操纵系统版本,一般只需要是当前较新的Linux即可,对于三种转发方法: NAT、DR、Tun均可以支持。

注: 我们不考虑windows、或其他老本的Linux上搭建LVS

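2) Choosing a Linux kernel version

The Linux kernels in use today are generally 2.6 or later, and these already include the ip_vs patch. There is therefore no need to rebuild the kernel as in the past; usually only ipvsadm has to be installed by hand.

3) Do the realservers need to handle the ARP problem?

- LVS-NAT: no ARP problem (the realservers do not carry the VIP)

- LVS-DR (and possibly LVS-Tun): the realservers need to handle the ARP problem

4) Why netmask=/32 for the VIP in LVS-DR?

With LVS-DR, packets forwarded to a realserver keep the VIP as their destination address, so the realserver needs some way to accept that traffic. One way is to add an interface carrying the VIP to the loopback device and hide it so that it does not answer ARP requests.

Note: ARP must be disabled here; otherwise several hosts on the same network would all claim the VIP as their IP address.

The netmask must also be set to 255.255.255.255, because the loopback interface answers for every address in the network configured on it. For example, an IP of 192.168.1.10 with a netmask of 255.255.255.0 would make the host accept packets for the whole 192.168.1.0-192.168.1.255 range, which is normally not what we want for the VIP.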
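The effect of the netmask can be checked with a little arithmetic. The following sketch, written in plain POSIX shell, tests whether an address falls inside the network defined by an interface IP and netmask. The helpers `ip_to_int` and `same_network` are hypothetical names introduced for this example, and the addresses are the ones from the paragraph above.

```shell
#!/bin/sh
# Sketch: show which addresses an (IP, netmask) pair covers.
# ip_to_int and same_network are hypothetical helpers written for this example.

# Convert dotted-quad a.b.c.d to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address on dots into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# same_network ADDR IFACE_IP NETMASK
# prints "yes" if ADDR lies in the network that IFACE_IP/NETMASK defines
same_network() {
    addr=$(ip_to_int "$1")
    ifip=$(ip_to_int "$2")
    mask=$(ip_to_int "$3")
    if [ $(( addr & mask )) -eq $(( ifip & mask )) ]; then
        echo yes
    else
        echo no
    fi
}

same_network 192.168.1.77 192.168.1.10 255.255.255.0     # /24 also covers .77
same_network 192.168.1.77 192.168.1.10 255.255.255.255   # /32 covers only .10
```

The first call prints yes and the second prints no, which is why the VIP on lo is configured with a 255.255.255.255 mask.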

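5) Choosing an LVS forwarding type: LVS-NAT, LVS-DR, LVS-Tun

The realservers must be configured correctly for the chosen forwarding type. There are two main points to consider:

- handling the ARP problem

- setting the default gateway

LVS-NAT: the DIP

LVS-DR, LVS-Tun: a router (anything but the director)

LVS-NAT was the first mode to be developed, and in the early days people built their LVS with it. On 2.2.x kernels, LVS-NAT uses more CPU than LVS-DR, because LVS-NAT has to rewrite packets. On 2.4.x kernels, LVS-NAT performs considerably better, at roughly the same level as LVS-DR. For a simple test, LVS-NAT has the advantage that only the director has to be patched; it places few demands on the realserver OS (Linux, Windows).

In production, LVS-DR is usually the first choice. Beyond simple testing, use LVS-NAT only if you really need some of its features.

Some restrictions of the 3 LVS forwarding modes are listed below: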

                        LVS-NAT                     LVS-Tun             LVS-DR

realserver OS           any                         must tunnel         most

realserver mods         none                        tunl must not arp   lo must not arp

port remapping          yes                         no                  no

realserver network      private (remote or local)   on internet         local

realserver number       low                         high                high

client connects to      VIP                         VIP                 VIP

realserver default gw   director                    own router          own router

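When choosing a forwarding mode, consider them in this order:

- LVS-DR: the default choice. It is efficient and easy to set up on most operating systems. The realservers and the director are on the same network (the realservers and director can arp each other)

- LVS-NAT: less efficient, with higher latency. The realservers only need a basic TCP/IP stack

- LVS-Tun: the realservers generally have to run Linux; efficiency is comparable to LVS-DR. The realservers and the director may be on different networks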

3.2 ipvsadm usage

The basic usage of ipvsadm is:

# ipvsadm --help

ipvsadm v1.27 2008/5/15 (compiled with popt and IPVS v1.2.1)

Usage:

ipvsadm -A|E -t|u|f service-address [-s scheduler] [-p [timeout]] [-M netmask] [--pe persistence_engine] [-b sched-flags]

ipvsadm -D -t|u|f service-address

ipvsadm -C

ipvsadm -R

ipvsadm -S [-n]

ipvsadm -a|e -t|u|f service-address -r server-address [options]

ipvsadm -d -t|u|f service-address -r server-address

ipvsadm -L|l [options]

ipvsadm -Z [-t|u|f service-address]

ipvsadm --set tcp tcpfin udp

ipvsadm --start-daemon state [--mcast-interface interface] [--syncid sid]

ipvsadm --stop-daemon state

ipvsadm -h

1) Commands

--add-service -A: add a virtual service; options may follow

--edit-service -E: modify a virtual service; options may follow

--delete-service -D: delete a virtual service

--clear -C: clear the whole LVS table

--restore -R: reload rules from standard input

--save -S: save the rules to standard output

--add-server -a: add a real server; options may follow

--edit-server -e: modify a real server; options may follow

--delete-server -d: delete a real server

--list -L|-l: list the whole LVS table

--zero -Z: zero the counters of one service or of all services

--set tcp tcpfin udp: set the connection timeout values

--start-daemon: start the connection synchronization daemon

--stop-daemon: stop the connection synchronization daemon

2) Options

--tcp-service -t service-address: the service address is host[:port]

--udp-service -u service-address: the service address is host[:port]

--fwmark-service -f fwmark: fwmark is an integer greater than 0

--ipv6 -6: the fwmark entry uses IPv6

--scheduler -s scheduler: one of rr|wrr|lc|wlc|lblc|lblcr|dh|sh|sed|nq; the default scheduler is wlc

--persistent -p [timeout]: enable persistent connections, so that multiple requests from the same client are sent to the same real server; typically used for FTP or SSL

--netmask -M netmask: the netmask applied to client addresses, used to send requests from clients in the same subnet to the same server

--real-server -r server-address: the address of the real server

--gatewaying -g: use DR mode

--ipip -i: use IPIP encapsulation, i.e. Tun mode

--masquerading -m: use NAT mode

--weight -w weight: the weight of a real server

--u-threshold -x uthreshold: the upper connection limit of a server

--connection -c: list the current IPVS connections

--stats: list the current statistics

--rate: list the current rate information
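As a small illustration of how the command and option flags combine, the sketch below generates rule lines in the form that `ipvsadm -R` reads from standard input, where each line holds the arguments of one ipvsadm invocation. `gen_rules` and its argument order are hypothetical, and the addresses are examples taken from the test setup used later in this article.

```shell
#!/bin/sh
# Sketch: emit rules in the line-per-command form that `ipvsadm -R` reads from stdin.
# gen_rules and its arguments are hypothetical; the addresses are examples.

# gen_rules VIP:PORT SCHEDULER FORWARD_FLAG RIP:PORT...
#   FORWARD_FLAG is -g (DR), -i (Tun) or -m (NAT)
gen_rules() {
    vs=$1
    sched=$2
    fwd=$3
    shift 3
    # one -A line defines the virtual service
    echo "-A -t $vs -s $sched"
    # one -a line per realserver adds it to that service with weight 1
    for rs in "$@"; do
        echo "-a -t $vs -r $rs $fwd -w 1"
    done
}

gen_rules 192.168.79.180:80 rr -g 192.168.79.129:80 192.168.79.131:80
# On a director (as root) this output could be piped into: ipvsadm -R
```

The function only prints text, so it can be tried on any machine; nothing is changed until the output is actually fed to ipvsadm.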

4. LVS setup examples

The following are setup examples for the 3 forwarding modes. The operating system used here is:

# cat /etc/redhat-release

CentOS Linux release 7.3.1611 (Core)

# uname -a

Linux localhost.localdomain 3.10.0-514.el7.x86_64 #1 SMP Tue Nov 22 16:42:41 UTC 2016 x86_64 x86_64 x86_64 GNU/Linux

4.1 Building an LVS with LVS-DR forwarding

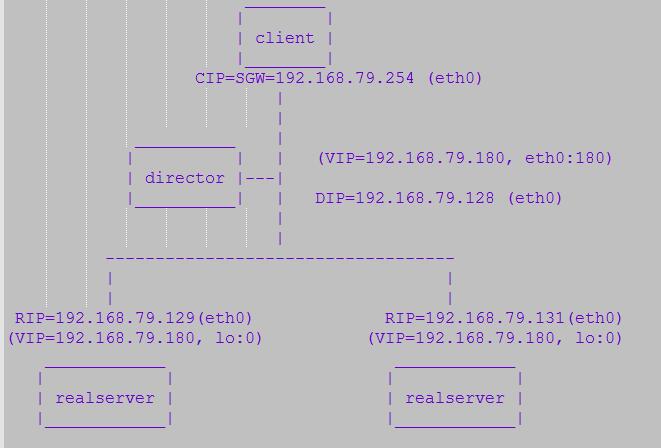

1) Test environment

Here the LVS is built in a single network (192.168.79.0/24). The 3 hosts must be able to ping each other.

Role OS IP configuration

--------------------------------------------------------------------------------

Director Centos7.3 VIP: 192.168.79.180 (ens33:180)

DIP: 192.168.79.128 (ens33)

RealServer1 Centos7.3 RIP: 192.168.79.129 (ens33)

VIP: 192.168.79.180 (lo:0)

RealServer2 Centos7.3 RIP: 192.168.79.131 (ens33)

VIP: 192.168.79.180 (lo:0)

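The overall network topology is as follows:

2) Software installation

- On the director, install ipvsadm with the following commands

# lsmod | grep ip_vs //check whether the ip_vs module is loaded

# yum install ipvsadm

# ipvsadm -v

ipvsadm v1.27 2008/5/15 (compiled with popt and IPVS v1.2.1)

- On realserver1 and realserver2, install nginx

# yum install nginx

After installation, check that nginx is working properly.

3) Director configuration

Run the following script (lvsDR_director_setup.sh):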

#!/bin/bash

# setting up lvs(director)

ipv=/sbin/ipvsadm

vip=192.168.79.180

rs1=192.168.79.129

rs2=192.168.79.131

#set ip_forward OFF for lvs-dr director (1 on, 0 off)

#(there is no forwarding in the conventional sense for LVS-DR)

cat /proc/sys/net/ipv4/ip_forward

echo "0" >/proc/sys/net/ipv4/ip_forward

#director is not gw for realservers: leave icmp redirects on

echo 'setting icmp redirects (1 on, 0 off) '

echo "1" >/proc/sys/net/ipv4/conf/all/send_redirects

cat /proc/sys/net/ipv4/conf/all/send_redirects

echo "1" >/proc/sys/net/ipv4/conf/default/send_redirects

cat /proc/sys/net/ipv4/conf/default/send_redirects

echo "1" >/proc/sys/net/ipv4/conf/ens33/send_redirects

cat /proc/sys/net/ipv4/conf/ens33/send_redirects

#add ethernet device and routing for VIP 192.168.79.180

ifconfig ens33:180 $vip broadcast $vip netmask 255.255.255.0 up

route add -host $vip dev ens33:180

ifconfig ens33:180 #listing ifconfig info for VIP 192.168.79.180

#check VIP 192.168.79.180 is reachable from self (director)

/bin/ping -c 1 192.168.79.180

#listing routing info for VIP 192.168.79.180

/bin/netstat -rn

#setup_ipvsadm_table

$ipv -C

$ipv -A -t $vip:80 -s rr

$ipv -a -t $vip:80 -r $rs1 -g -w 1

ping -c 1 $rs1 #check realserver reachable from director

$ipv -a -t $vip:80 -r $rs2 -g -w 1

ping -c 1 $rs2

#displaying ipvsadm settings

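/sbin/ipvsadm

Note: even with rr scheduling it is still useful to pass -w when adding a realserver: if a realserver fails, its weight can be set to 0 to take it out of the cluster.

After the script has run, the network settings look like this: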

# ./lvsDR_director_setup.sh

0

setting icmp redirects (1 on, 0 off)

1

1

1

SIOCADDRT: File exists

ens33:180: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.79.180 netmask 255.255.255.0 broadcast 192.168.79.180

ether 00:0c:29:15:61:68 txqueuelen 1000 (Ethernet)

PING 192.168.79.180 (192.168.79.180) 56(84) bytes of data.

64 bytes from 192.168.79.180: icmp_seq=1 ttl=64 time=0.075 ms

--- 192.168.79.180 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.075/0.075/0.075/0.000 ms

Kernel IP routing table

Destination Gateway Genmask Flags MSS Window irtt Iface

0.0.0.0 10.133.146.1 0.0.0.0 UG 0 0 0 ens37

0.0.0.0 192.168.79.2 0.0.0.0 UG 0 0 0 ens33

10.133.144.249 10.133.146.1 255.255.255.255 UGH 0 0 0 ens37

10.133.146.0 0.0.0.0 255.255.255.0 U 0 0 0 ens37

192.168.79.0 0.0.0.0 255.255.255.0 U 0 0 0 ens33

192.168.79.180 0.0.0.0 255.255.255.255 UH 0 0 0 ens33

192.168.122.0 0.0.0.0 255.255.255.0 U 0 0 0 virbr0

PING 192.168.79.129 (192.168.79.129) 56(84) bytes of data.

64 bytes from 192.168.79.129: icmp_seq=1 ttl=64 time=0.487 ms

--- 192.168.79.129 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.487/0.487/0.487/0.000 ms

PING 192.168.79.131 (192.168.79.131) 56(84) bytes of data.

64 bytes from 192.168.79.131: icmp_seq=1 ttl=64 time=0.336 ms

--- 192.168.79.131 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.336/0.336/0.336/0.000 ms

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP localhost.localdomain:http rr

-> 192.168.79.129:http Route 1 0 0

-> 192.168.79.131:http Route 1 0 0

Note: to remove a VIP binding from a NIC, run a command like the following

ip addr del 10.10.17.247/32 dev eth0

4) RealServer1 configuration

Configuration script on Realserver1 (lvsDR_realserver_setup.sh):

#!/bin/bash

vip=192.168.79.180

#set_realserver_ip_forwarding to OFF (1 on, 0 off).

echo "0" >/proc/sys/net/ipv4/ip_forward

cat /proc/sys/net/ipv4/ip_forward

#looking for DIP 192.168.79.128

ping -c 1 192.168.79.128

#install_realserver_vip

ifconfig lo:0 $vip broadcast $vip netmask 255.255.255.255 up

ifconfig lo:0

route add -host $vip dev lo:0

netstat -rn #listing routing info for VIP 192.168.79.180

echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

The output is as follows:

# ./lvsDR_realserver_setup.sh

0

PING 192.168.79.128 (192.168.79.128) 56(84) bytes of data.

64 bytes from 192.168.79.128: icmp_seq=1 ttl=64 time=5.86 ms

--- 192.168.79.128 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 5.863/5.863/5.863/0.000 ms

lo:0: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 192.168.79.180 netmask 255.255.255.255

loop txqueuelen 1 (Local Loopback)

Kernel IP routing table

Destination Gateway Genmask Flags MSS Window irtt Iface

0.0.0.0 192.168.79.2 0.0.0.0 UG 0 0 0 ens33

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

172.18.0.0 0.0.0.0 255.255.0.0 U 0 0 0 br-d9757596964e

172.19.0.0 0.0.0.0 255.255.0.0 U 0 0 0 br-b991863fa6b0

192.168.79.0 0.0.0.0 255.255.255.0 U 0 0 0 ens33

192.168.79.180 0.0.0.0 255.255.255.255 UH 0 0 0 lo

192.168.122.0 0.0.0.0 255.255.255.0 U 0 0 0 virbr0

5) RealServer2 configuration

Configuration script on Realserver2 (lvsDR_realserver_setup.sh):

#!/bin/bash

vip=192.168.79.180

#set_realserver_ip_forwarding to OFF (1 on, 0 off).

echo "0" >/proc/sys/net/ipv4/ip_forward

cat /proc/sys/net/ipv4/ip_forward

#looking for DIP 192.168.79.128

ping -c 1 192.168.79.128

#install_realserver_vip

ifconfig lo:0 $vip broadcast $vip netmask 255.255.255.255 up

ifconfig lo:0

route add -host $vip dev lo:0

netstat -rn #listing routing info for VIP 192.168.79.180

echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce

echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore

echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce

Running the configuration script gives:

# ./lvsDR_realserver_setup.sh

0

PING 192.168.79.128 (192.168.79.128) 56(84) bytes of data.

64 bytes from 192.168.79.128: icmp_seq=1 ttl=64 time=1.10 ms

--- 192.168.79.128 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 1.106/1.106/1.106/0.000 ms

lo:0: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 192.168.79.180 netmask 255.255.255.255

loop txqueuelen 1 (Local Loopback)

Kernel IP routing table

Destination Gateway Genmask Flags MSS Window irtt Iface

0.0.0.0 192.168.79.2 0.0.0.0 UG 0 0 0 ens34

192.168.79.0 0.0.0.0 255.255.255.0 U 0 0 0 ens34

192.168.79.180 0.0.0.0 255.255.255.255 UH 0 0 0 lo

192.168.122.0 0.0.0.0 255.255.255.0 U 0 0 0 virbr0

6) Testing LVS-DR

Run ipvsadm on the director:

# ipvsadm

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

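TCP localhost.localdomain:http rr

-> 192.168.79.129:http Route 1 0 0

-> 192.168.79.131:http Route 1 0 0

In a browser, force a refresh with CTRL+F5 several times and the requests can be seen switching between realservers; alternatively, use Fiddler to send multiple test requests.

Note that the director can also act as a realserver: simply run the lvsDR_realserver_setup.sh script above on that machine as well.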
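The same check can be done from a shell instead of a browser. The sketch below counts how often each realserver answered. It assumes, purely for illustration, that each realserver returns a one-line page containing its own name; `tally` is a hypothetical helper, and the sample input stands in for real responses.

```shell
#!/bin/sh
# Sketch: count how many responses came from each realserver.
# Assumption: every realserver serves a one-line page that identifies it (e.g. "rs1").
# tally is a hypothetical helper written for this example.

# tally reads one response per line on stdin and prints "<response> <count>", sorted.
tally() {
    awk '{ count[$0]++ } END { for (k in count) print k, count[k] }' | sort
}

# Stand-in for four responses collected through the VIP:
printf 'rs1\nrs2\nrs1\nrs2\n' | tally

# Against a live setup one might collect the responses like this instead:
#   for i in 1 2 3 4; do curl -s http://192.168.79.180/; done | tally
```

With rr scheduling and equal weights, the counts for the two realservers should come out roughly equal.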

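7) Characteristics of the LVS-DR model

- The RS and the director must be on the same physical network

- The RS can use private or public addresses. With public addresses, the RIP can be reached directly from the Internet

- All request packets must go through the director, but response packets must not go through it

- The RS gateway must never point to the DIP (packets are not allowed to return through the director)

- The VIP is configured on the lo interface of the RS

When using LVS-DR, make sure the front-end router sends all packets destined for the VIP to the director, not to an RS.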

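4.2 Building an LVS with LVS-NAT forwarding

1) Test environment

LVS-NAT is the earliest method used to build an LVS cluster. On a director running a 2.2 kernel under heavy load, LVS-NAT has lower throughput than LVS-DR and LVS-Tun because rewriting packets costs CPU (though it is still useful in some environments). On a director running a 2.4 (or later) kernel, LVS-NAT performs close to the other forwarding modes.

Here the director and the realservers share one network (192.168.79.0/24). The 3 hosts must be able to ping each other.

Role OS IP configuration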

--------------------------------------------------------------------------------

Director Centos7.3 VIP: 10.133.146.47 (ens37) //bridged mode

DIP: 192.168.79.128 (ens33) //NAT mode

RealServer1 Centos7.3 RIP: 192.168.79.129 (ens33) //NAT mode

RealServer2 Centos7.3 RIP: 192.168.79.131 (ens33) //NAT mode

Client Windows CIP: 10.133.146.59

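The director is a VMware virtual machine with two NICs, ens33 and ens37. There are two networks: the VIP and the CIP are in one network, attached in bridged mode; the DIP, RIP1 and RIP2 are in the other, attached in NAT mode.

The overall network topology is as follows:

2) Software installation

- On the director, install ipvsadm with the following commands

# lsmod | grep ip_vs //check whether the ip_vs module is loaded

# yum install ipvsadm

# ipvsadm -v

ipvsadm v1.27 2008/5/15 (compiled with popt and IPVS v1.2.1)

- On realserver1 and realserver2, install nginx

# yum install nginx

After installation, check that nginx is working properly.

3) Director configuration

iptables is used here for NAT forwarding, so the firewall service has to be running first:

# sudo systemctl status firewalld

# sudo systemctl start firewalld

Run the following script (lvsNAT_director_setup.sh):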

#!/bin/bash

VIP=10.133.146.47

DIP=192.168.79.128

RIP1=192.168.79.129

RIP2=192.168.79.131

IPVSADM='/sbin/ipvsadm'

#set ip_forward ON for vs-nat director (1 on, 0 off).

cat /proc/sys/net/ipv4/ip_forward

echo "1" >/proc/sys/net/ipv4/ip_forward

#director is gw for realservers

#turn OFF icmp redirects (1 on, 0 off)

echo "0" > /proc/sys/net/ipv4/conf/all/send_redirects

cat /proc/sys/net/ipv4/conf/all/send_redirects

echo "0" > /proc/sys/net/ipv4/conf/default/send_redirects

cat /proc/sys/net/ipv4/conf/default/send_redirects

echo "0" > /proc/sys/net/ipv4/conf/ens33/send_redirects

cat /proc/sys/net/ipv4/conf/ens33/send_redirects

echo "0" > /proc/sys/net/ipv4/conf/ens37/send_redirects

cat /proc/sys/net/ipv4/conf/ens37/send_redirects

#director set NAT-firewall

#delete all rules in nat-chain

iptables -t nat -F

#Delete a user-defined chain

iptables -t nat -X

#iptables -t nat -A POSTROUTING -s 192.168.79.0/24 -o ens37 -j SNAT --to-source 10.133.146.47

iptables -t nat -A POSTROUTING -s 192.168.79.0/24 -o ens37 -j MASQUERADE

iptables -L -t nat

#clear ipvsadm tables

$IPVSADM -C

#install LVS services with ipvsadm

#add http to VIP with rr scheduling

$IPVSADM -A -t $VIP:80 -s rr

#first realserver

#forward http to realserver 'RIP1' using LVS-NAT (-m), with weight=1

$IPVSADM -a -t $VIP:80 -r $RIP1:80 -m -w 1

#checking if realserver is reachable from director

ping -c 1 $RIP1

#second realserver

#forward http to realserver 'RIP2' using LVS-NAT (-m), with weight=1

$IPVSADM -a -t $VIP:80 -r $RIP2:80 -m -w 1

#checking if realserver is reachable from director

ping -c 1 $RIP2

#list ipvsadm table

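/sbin/ipvsadm

After the script has run, check the current network settings. Two things are worth noting:

- the current settings of the iptables nat table

- the LVS forwarding mode configuration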

# ./lvsNAT_director_setup.sh

1

0

0

0

0

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

MASQUERADE all -- 192.168.79.0/24 anywhere

PING 192.168.79.129 (192.168.79.129) 56(84) bytes of data.

64 bytes from 192.168.79.129: icmp_seq=1 ttl=64 time=0.281 ms

--- 192.168.79.129 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.281/0.281/0.281/0.000 ms

PING 192.168.79.131 (192.168.79.131) 56(84) bytes of data.

64 bytes from 192.168.79.131: icmp_seq=1 ttl=64 time=0.232 ms

--- 192.168.79.131 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.232/0.232/0.232/0.000 ms

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP localhost.localdomain:http rr

-> 192.168.79.129:http Masq 1 0 0

-> 192.168.79.131:http Masq 1 0 0

4) RealServer1 configuration

Configuration script on Realserver1 (lvsNAT_realserver_setup.sh):

#!/bin/sh

DIP=192.168.79.128

VIP=10.133.146.47

#---------mini-HOWTO-setup-LVS-NAT-realserver-------

#installing default gw $DIP for vs-nat'

/sbin/route add default gw $DIP

#show routing table

/bin/netstat -rn

#checking if DEFAULT_GW is reachable

ping -c 1 $DIP

#looking for VIP on director from realserver

ping -c 1 $VIP

#set_realserver_ip_forwarding to OFF (1 on, 0 off).

echo "0" >/proc/sys/net/ipv4/ip_forward

cat /proc/sys/net/ipv4/ip_forward

#---------mini-HOWTO-setup-LVS-NAT-realserver------

The output is as follows (note the newly added default gateway 192.168.79.128):

# ./lvsNAT_realserver_setup.sh

SIOCADDRT: File exists

Kernel IP routing table

Destination Gateway Genmask Flags MSS Window irtt Iface

0.0.0.0 192.168.79.128 0.0.0.0 UG 0 0 0 ens33

0.0.0.0 192.168.79.2 0.0.0.0 UG 0 0 0 ens33

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

172.18.0.0 0.0.0.0 255.255.0.0 U 0 0 0 br-d9757596964e

172.19.0.0 0.0.0.0 255.255.0.0 U 0 0 0 br-b991863fa6b0

192.168.79.0 0.0.0.0 255.255.255.0 U 0 0 0 ens33

192.168.79.180 0.0.0.0 255.255.255.255 UH 0 0 0 lo

192.168.122.0 0.0.0.0 255.255.255.0 U 0 0 0 virbr0

PING 192.168.79.128 (192.168.79.128) 56(84) bytes of data.

64 bytes from 192.168.79.128: icmp_seq=1 ttl=64 time=1.29 ms

--- 192.168.79.128 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 1.290/1.290/1.290/0.000 ms

PING 10.133.146.47 (10.133.146.47) 56(84) bytes of data.

64 bytes from 10.133.146.47: icmp_seq=1 ttl=64 time=0.597 ms

--- 10.133.146.47 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.597/0.597/0.597/0.000 ms

5) RealServer2 configuration

Configuration script on Realserver2 (lvsNAT_realserver_setup.sh):

#!/bin/sh

DIP=192.168.79.128

VIP=10.133.146.47

#---------mini-HOWTO-setup-LVS-NAT-realserver-------

#installing default gw $DIP for vs-nat'

/sbin/route add default gw $DIP

#show routing table

/bin/netstat -rn

#checking if DEFAULT_GW is reachable

ping -c 1 $DIP

#looking for VIP on director from realserver

ping -c 1 $VIP

#set_realserver_ip_forwarding to OFF (1 on, 0 off).

echo "0" >/proc/sys/net/ipv4/ip_forward

cat /proc/sys/net/ipv4/ip_forward

#---------mini-HOWTO-setup-LVS-NAT-realserver------

The output is as follows (note the newly added default gateway 192.168.79.128):

# ./lvsNAT_realserver_setup.sh

Kernel IP routing table

Destination Gateway Genmask Flags MSS Window irtt Iface

0.0.0.0 192.168.79.128 0.0.0.0 UG 0 0 0 ens34

0.0.0.0 192.168.79.2 0.0.0.0 UG 0 0 0 ens34

192.168.79.0 0.0.0.0 255.255.255.0 U 0 0 0 ens34

192.168.79.180 0.0.0.0 255.255.255.255 UH 0 0 0 lo

192.168.122.0 0.0.0.0 255.255.255.0 U 0 0 0 virbr0

PING 192.168.79.128 (192.168.79.128) 56(84) bytes of data.

64 bytes from 192.168.79.128: icmp_seq=1 ttl=64 time=0.392 ms

--- 192.168.79.128 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.392/0.392/0.392/0.000 ms

PING 10.133.146.47 (10.133.146.47) 56(84) bytes of data.

64 bytes from 10.133.146.47: icmp_seq=1 ttl=64 time=0.347 ms

--- 10.133.146.47 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.347/0.347/0.347/0.000 ms

6) Testing LVS-NAT

Run ipvsadm on the director:

# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 10.133.146.47:80 rr

-> 192.168.79.129:80 Masq 1 0 0

-> 192.168.79.131:80 Masq 1 0 0

To verify, request the HTTP service on the VIP (10.133.146.47) from a browser. The forwarding state can also be inspected with the following commands:

# ipvsadm -ln --stats

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Conns InPkts OutPkts InBytes OutBytes

-> RemoteAddress:Port

TCP 10.133.146.47:80 3 16 10 1018 2236

-> 192.168.79.129:80 1 2 0 120 0

-> 192.168.79.131:80 2 14 10 898 2236

# ipvsadm -ln --rate

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port CPS InPPS OutPPS InBPS OutBPS

-> RemoteAddress:Port

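TCP 10.133.146.47:80 0 1 0 34 69

-> 192.168.79.129:80 0 0 0 6 0

-> 192.168.79.131:80 0 0 0 28 69

7) Characteristics of NAT mode

- The RS and the DIP are in the same private network (private IP addresses), and the RS gateway must point to the DIP

- Both request and response packets are forwarded by the director; under very high load the director can become the system bottleneck

- NAT mode supports port mapping

- The RS can run any operating system (OS)

- The director needs two NICs (a typical LAN/WAN setup), and the RIPs must be in the same IP network as one of the director's NICs

- The VIP is configured on the director NIC that receives client requests and serves clients directly

Pros: simple to implement and easy to understand

Cons: the director becomes the bottleneck because every packet passes through it, so the number of backend RSs is limited to roughly 10 to 20 (depending on server performance). If the director goes down the consequences are severe, and geographic disaster recovery is not supported.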

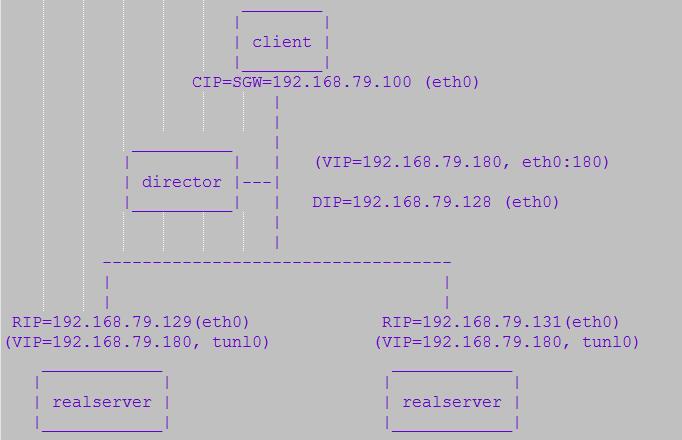

4.3 Building an LVS with LVS-TUN forwarding

1) Test environment

Here the LVS is built in a single network (192.168.79.0/24). The 3 hosts must be able to ping each other.

Role OS IP configuration

--------------------------------------------------------------------------------

Director Centos7.3 VIP: 192.168.79.180 (ens33:180)

DIP: 192.168.79.128 (ens33)

RealServer1 Centos7.3 RIP: 192.168.79.129 (ens33)

VIP: 192.168.79.180 (tunl0)

RealServer2 Centos7.3 RIP: 192.168.79.131 (ens33)

VIP: 192.168.79.180 (tunl0)

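The overall network topology is as follows:

2) Software installation

- On the director, install ipvsadm with the following commands

# lsmod | grep ip_vs //check whether the ip_vs module is loaded

# yum install ipvsadm

# ipvsadm -v

ipvsadm v1.27 2008/5/15 (compiled with popt and IPVS v1.2.1)

- On realserver1 and realserver2, install nginx

# yum install nginx

After installation, check that nginx is working properly.

3) Director configuration

Run the following script (lvsTUN_director_setup.sh):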

#!/bin/bash

# setting up lvs(director)

ipv=/sbin/ipvsadm

vip=192.168.79.180

dip=192.168.79.128

rs1=192.168.79.129

rs2=192.168.79.131

#set ip_forward OFF for lvs-tun director (1 on, 0 off)

echo "0" > /proc/sys/net/ipv4/ip_forward

cat /proc/sys/net/ipv4/ip_forward

#director is not gw for realservers: leave icmp redirects on

echo 'setting icmp redirects (1 on, 0 off) '

echo "1" > /proc/sys/net/ipv4/conf/all/send_redirects

cat /proc/sys/net/ipv4/conf/all/send_redirects

echo "1" > /proc/sys/net/ipv4/conf/default/send_redirects

cat /proc/sys/net/ipv4/conf/default/send_redirects

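#NOTE: the interface on this host is ens33, not eth0, so the next two commands fail (see the output below)

echo "1" > /proc/sys/net/ipv4/conf/eth0/send_redirects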

cat /proc/sys/net/ipv4/conf/eth0/send_redirects

# not use iptables

iptables -t nat -F

iptables -t nat -L

setenforce 0

#add ethernet device and routing for VIP 192.168.79.180

ifconfig ens33:180 $vip broadcast $vip netmask 255.255.255.0 up

route add -host $vip dev ens33:180

ifconfig ens33:180 #listing ifconfig info for VIP 192.168.79.180

#check VIP 192.168.79.180 is reachable from self (director)

/bin/ping -c 1 $vip

#listing routing info for VIP 192.168.79.180

/bin/netstat -rn

#setup_ipvsadm_table

$ipv -C

$ipv -A -t $vip:80 -s rr

$ipv -a -t $vip:80 -r $rs1:80 -i -w 1

ping -c 1 $rs1 #check realserver reachable from director

$ipv -a -t $vip:80 -r $rs2:80 -i -w 1

ping -c 1 $rs2

#displaying ipvsadm settings

/sbin/ipvsadm

Run the script:

# ./lvsTUN_director_setup.sh

0

setting icmp redirects (1 on, 0 off)

1

1

./lvsTUN_director_setup.sh: line 23: /proc/sys/net/ipv4/conf/eth0/send_redirects: No such file or directory

cat: /proc/sys/net/ipv4/conf/eth0/send_redirects: No such file or directory

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

setenforce: SELinux is disabled

SIOCADDRT: File exists

ens33:180: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.79.180 netmask 255.255.255.0 broadcast 192.168.79.180

ether 00:0c:29:15:61:68 txqueuelen 1000 (Ethernet)

PING 192.168.79.180 (192.168.79.180) 56(84) bytes of data.

64 bytes from 192.168.79.180: icmp_seq=1 ttl=64 time=0.068 ms

--- 192.168.79.180 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.068/0.068/0.068/0.000 ms

Kernel IP routing table

Destination Gateway Genmask Flags MSS Window irtt Iface

0.0.0.0 192.168.79.2 0.0.0.0 UG 0 0 0 ens33

0.0.0.0 10.133.146.1 0.0.0.0 UG 0 0 0 ens37

10.133.144.249 10.133.146.1 255.255.255.255 UGH 0 0 0 ens37

10.133.146.0 0.0.0.0 255.255.255.0 U 0 0 0 ens37

192.168.79.0 0.0.0.0 255.255.255.0 U 0 0 0 ens33

192.168.79.180 0.0.0.0 255.255.255.255 UH 0 0 0 ens33

192.168.79.180 0.0.0.0 255.255.255.255 UH 0 0 0 ens33

192.168.122.0 0.0.0.0 255.255.255.0 U 0 0 0 virbr0

PING 192.168.79.129 (192.168.79.129) 56(84) bytes of data.

64 bytes from 192.168.79.129: icmp_seq=1 ttl=64 time=0.273 ms

--- 192.168.79.129 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.273/0.273/0.273/0.000 ms

PING 192.168.79.131 (192.168.79.131) 56(84) bytes of data.

64 bytes from 192.168.79.131: icmp_seq=1 ttl=64 time=0.198 ms

--- 192.168.79.131 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.198/0.198/0.198/0.000 ms

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP localhost.localdomain:http rr

-> 192.168.79.129:http Tunnel 1 0 0

-> 192.168.79.131:http Tunnel 1 0 0

4) RealServer1 configuration

Configuration script on Realserver1 (lvsTUN_realserver_setup.sh):

#!/bin/bash

vip=192.168.79.180

dip=192.168.79.128

#install ipip kernel module

modprobe ipip

modinfo ipip

#set ip_forward OFF on the lvs-tun realserver (1 on, 0 off)

echo "0" > /proc/sys/net/ipv4/ip_forward

cat /proc/sys/net/ipv4/ip_forward

#install_realserver_vip

/sbin/ifconfig tunl0 $vip broadcast $vip netmask 255.255.255.255 up

#/sbin/route add -host $vip dev tunl0

ifconfig tunl0 #listing ifconfig info for VIP 192.168.79.180

#check VIP 192.168.79.180 is reachable from self (realserver)

/bin/ping -c 1 $vip

#listing routing info for VIP 192.168.79.180

/bin/netstat -rn

#ignore arp

echo 1 > /proc/sys/net/ipv4/conf/tunl0/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/tunl0/arp_announce

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

#No source validation

echo 0 > /proc/sys/net/ipv4/conf/tunl0/rp_filter

echo 0 > /proc/sys/net/ipv4/conf/all/rp_filter

Run the script:

# ./lvsTUN_realserver_setup.sh

filename: /lib/modules/3.10.0-514.el7.x86_64/kernel/net/ipv4/ipip.ko

alias: netdev-tunl0

alias: rtnl-link-ipip

license: GPL

rhelversion: 7.3

srcversion: C857E931D7314D7EAEC0761

depends: ip_tunnel,tunnel4

intree: Y

vermagic: 3.10.0-514.el7.x86_64 SMP mod_unload modversions

signer: CentOS Linux kernel signing key

sig_key: D4:88:63:A7:C1:6F:CC:27:41:23:E6:29:8F:74:F0:57:AF:19:FC:54

sig_hashalgo: sha256

parm: log_ecn_error:Log packets received with corrupted ECN (bool)

0

tunl0: flags=193<UP,RUNNING,NOARP> mtu 1480

inet 192.168.79.180 netmask 255.255.255.255

tunnel txqueuelen 1 (IPIP Tunnel)

RX packets 96 bytes 19403 (18.9 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 26 dropped 0 overruns 0 carrier 0 collisions 26

PING 192.168.79.180 (192.168.79.180) 56(84) bytes of data.

64 bytes from 192.168.79.180: icmp_seq=1 ttl=64 time=0.046 ms

--- 192.168.79.180 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.046/0.046/0.046/0.000 ms

Kernel IP routing table

Destination Gateway Genmask Flags MSS Window irtt Iface

0.0.0.0 192.168.79.2 0.0.0.0 UG 0 0 0 ens33

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

172.18.0.0 0.0.0.0 255.255.0.0 U 0 0 0 br-d9757596964e

172.19.0.0 0.0.0.0 255.255.0.0 U 0 0 0 br-b991863fa6b0

192.168.79.0 0.0.0.0 255.255.255.0 U 0 0 0 ens33

192.168.122.0 0.0.0.0 255.255.255.0 U 0 0 0 virbr0

5) RealServer2 configuration

Configuration script on RealServer2 (lvsTUN_realserver_setup.sh):

#!/bin/bash

vip=192.168.79.180

dip=192.168.79.128

#install ipip kernel module

modprobe ipip

modinfo ipip

#set ip_forward OFF on the lvs-tun realserver (1 on, 0 off)

echo "0" > /proc/sys/net/ipv4/ip_forward

cat /proc/sys/net/ipv4/ip_forward

#install_realserver_vip

/sbin/ifconfig tunl0 $vip broadcast $vip netmask 255.255.255.255 up

/sbin/route add -host $vip dev tunl0

ifconfig tunl0 #listing ifconfig info for VIP 192.168.79.180

#check VIP 192.168.79.180 is reachable from self (realserver)

/bin/ping -c 1 $vip

#listing routing info for VIP 192.168.79.180

/bin/netstat -rn

#ignore arp

echo 1 > /proc/sys/net/ipv4/conf/tunl0/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/tunl0/arp_announce

echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore

echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce

#No source validation

echo 0 > /proc/sys/net/ipv4/conf/tunl0/rp_filter

echo 0 > /proc/sys/net/ipv4/conf/all/rp_filter

Run the script above:

# ./lvsTUN_realserver_setup.sh

filename: /lib/modules/3.10.0-514.el7.x86_64/kernel/net/ipv4/ipip.ko

alias: netdev-tunl0

alias: rtnl-link-ipip

license: GPL

rhelversion: 7.3

srcversion: C857E931D7314D7EAEC0761

depends: ip_tunnel,tunnel4

intree: Y

vermagic: 3.10.0-514.el7.x86_64 SMP mod_unload modversions

signer: CentOS Linux kernel signing key

sig_key: D4:88:63:A7:C1:6F:CC:27:41:23:E6:29:8F:74:F0:57:AF:19:FC:54

sig_hashalgo: sha256

parm: log_ecn_error:Log packets received with corrupted ECN (bool)

0

SIOCADDRT: File exists

tunl0: flags=193<UP,RUNNING,NOARP> mtu 1480

inet 192.168.79.180 netmask 255.255.255.255

tunnel txqueuelen 1 (IPIP Tunnel)

RX packets 554 bytes 170998 (166.9 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

PING 192.168.79.180 (192.168.79.180) 56(84) bytes of data.

64 bytes from 192.168.79.180: icmp_seq=1 ttl=64 time=0.062 ms

--- 192.168.79.180 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.062/0.062/0.062/0.000 ms

Kernel IP routing table

Destination Gateway Genmask Flags MSS Window irtt Iface

0.0.0.0 192.168.79.2 0.0.0.0 UG 0 0 0 ens34

192.168.79.0 0.0.0.0 255.255.255.0 U 0 0 0 ens34

192.168.79.180 0.0.0.0 255.255.255.255 UH 0 0 0 tunl0

192.168.122.0 0.0.0.0 255.255.255.0 U 0 0 0 virbr0

6) Testing LVS-TUN

Run the ipvsadm command on the Director:

# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.79.180:80 rr

-> 192.168.79.129:80 Tunnel 1 0 0

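-> 192.168.79.131:80 Tunnel 1 0 0

Note that requesting http://192.168.79.180 directly on the Director returns nothing. This is because the VIP is also bound on RealServer1 and RealServer2, and a realserver's response never travels back through the Director. Run the test from a separate client machine instead.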
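A client-side check of the rr scheduling shown above could look like the following sketch. It is only an illustration under stated assumptions: the helper names `fetch` and `tally` are hypothetical, and it assumes each realserver serves a one-line page on port 80 that identifies the server (for example its hostname), which the original setup does not specify.

```shell
#!/bin/bash
# VIP taken from the setup above.
vip=192.168.79.180

# fetch: request the VIP once and print the response body.
# Assumption: each realserver answers with a one-line page naming itself.
fetch() {
    curl -s --max-time 2 "http://$vip/"
}

# tally N: send N requests and count how many times each distinct
# response line was seen.
tally() {
    local n=$1 i
    for ((i = 0; i < n; i++)); do
        fetch
        echo    # make sure every response ends with a newline
    done | sed '/^$/d' | sort | uniq -c
}
```

Calling `tally 10` on a client machine (not on the Director) should then list roughly equal counts for the two realservers if round robin is working and both servers are reachable.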

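7) Characteristics of LVS-TUN mode

-

The RIP, VIP, and DIP are typically all public addresses, because the Director and the RSs must be able to route to each other and may sit in different networks

-

The RS gateway does not, and cannot, point to the DIP

-

All request packets pass through the Director Server, but responses must not pass through the Director Server

-

The RS must support IP tunneling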
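As a rough way to confirm that a realserver meets these requirements, the sketch below re-reads the kernel settings that lvsTUN_realserver_setup.sh writes. The names `PROC_ROOT`, `check_value`, and `check_realserver` are hypothetical helpers introduced here for illustration; the expected values are simply the ones used in the script above.

```shell
#!/bin/bash
# Root of the IPv4 sysctl tree; overridable so the checks can be
# pointed at a copy of the files.
PROC_ROOT=${PROC_ROOT:-/proc/sys/net/ipv4}

# check_value FILE EXPECTED: compare one kernel setting with the value
# that the setup script writes.
check_value() {
    local file=$PROC_ROOT/$1 want=$2 got
    if ! got=$(cat "$file" 2>/dev/null); then
        # conf/tunl0 only exists once the tunl0 device has been created
        echo "MISSING $1"
        return 1
    fi
    if [ "$got" = "$want" ]; then
        echo "OK      $1 = $got"
    else
        echo "DIFFERS $1 = $got (expected $want)"
        return 1
    fi
}

# check_realserver: run all checks, return non-zero if any of them fails.
check_realserver() {
    local rc=0
    check_value conf/tunl0/arp_ignore 1   || rc=1
    check_value conf/tunl0/arp_announce 2 || rc=1
    check_value conf/all/arp_ignore 1     || rc=1
    check_value conf/all/arp_announce 2   || rc=1
    check_value conf/tunl0/rp_filter 0    || rc=1
    check_value conf/all/rp_filter 0      || rc=1
    return $rc
}
```

Running `check_realserver` on a realserver after the setup script prints one line per setting. A `MISSING conf/tunl0/...` line suggests the ipip module has not been loaded, so the tunl0 device does not exist yet.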

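5. Summary

In production environments, DR mode is the one most commonly used.

-

NAT mode: the director forwards requests to the backend servers, the backend servers return their results to the director, and the director returns them to the client. All traffic in both directions passes through the LVS server, which makes it a bottleneck

-

DR mode: the director forwards each request by rewriting its destination MAC address, and the backend server sends the response directly to the client without going through the director

-

TUN mode: normally requires public (routable) addresses throughout, and adds IP encapsulation overhead compared with DR mode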
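The encapsulation overhead of TUN mode can be estimated with a little arithmetic. The sketch below assumes a standard 1500-byte Ethernet MTU and IPv4/TCP headers without options; the variable names are illustrative only. The resulting tunnel MTU is consistent with the `mtu 1480` reported for tunl0 in the realserver output earlier.

```shell
#!/bin/bash
link_mtu=1500        # assumed Ethernet MTU on the physical NIC
outer_ip_header=20   # outer IPv4 header added by IPIP (no options)
inner_headers=40     # inner IPv4 header (20) + TCP header (20), no options

# Room left for the original (inner) IP packet inside the tunnel.
tunnel_mtu=$((link_mtu - outer_ip_header))

# Largest TCP payload that fits in one tunnelled packet.
tunnel_mss=$((tunnel_mtu - inner_headers))

echo "tunl0 MTU: $tunnel_mtu"
echo "max TCP payload per tunnelled packet: $tunnel_mss"
```

Under these assumptions every forwarded request packet carries 20 extra bytes, so a full-sized packet holds 1440 bytes of TCP payload instead of the 1460 bytes available in DR mode.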

[See also]